Statistical analysis consulting firms act as a third party that helps researchers and students who are pursuing a master's or doctoral degree. Such a firm has multiple roles and tasks, as well as restrictions on the services it provides. Why should there be restrictions? Because the consultant and the student each have their own tasks. Developing the main idea of the research is the student's task, and the consultant cannot take it over. The consultant's main task, by contrast, is to assist with technical work. The tasks are as follows:

Data Analytics Consultants Advise on Methods

Because they lack knowledge of statistical analysis, students often need the help of data analytics consulting. A consultant can help a researcher choose an appropriate methodology and research design: whether quantitative or qualitative methods suit the research, whether the design should be prospective or retrospective, and whether an observational study, a survey, or an experimental design is needed. Consultants can also assist with the technical task of entering the data into a computer. In terms of research methodology, the consultant has a comprehensive overview and can offer alternative views of research methods and their advantages. Still, it is the researcher who has to choose the appropriate one.

Statistical Data Analysis Services

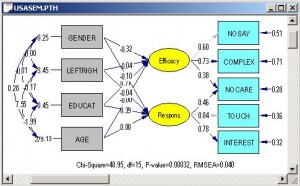

Researchers use a consultant when they do not have time to run the data themselves. Commonly, the analysis is done in statistical software such as SPSS, EViews, AMOS, PLS, or LISREL, so researchers need technical assistance with running the data. What should not be lost is that researchers still understand the processes and procedures of data processing, including the reason for choosing a particular software package and the reason for using certain analytical methods. The technical matters of running the data can be handled by the consultant.

Consultation on Data Analysis

One of the most important parts of research is interpreting the data. Without interpretation, statistical results are only numbers without meaning. Researchers discuss the results with the data analytics consultant to make sense of the figures. The consultant can explain what the values in the software output mean.

Ultimately, a consultant is expected to help researchers produce better research, in particular by using an appropriate research methodology. After all, a researcher can apply an arbitrary method of statistical analysis in software such as SPSS and output will still come out.

Secondly, statistical consulting firms can save researchers time: one or two weeks, or even months. Learning various research methods is not easy, and there are many kinds of statistical analysis methods. Researchers without a background in mathematics or statistics may find the unfamiliar terminology difficult to learn.

Restrictions on Data Analytics Consulting Tasks

However, there are some limits on how the services of consultants should be used.

- Researchers should not use the services of consultants to come up with the main ideas of the research.

- Researchers should not ask consultants to help them commit plagiarism.

- Researchers should not use consultants to replace their own role as researchers.

- Finally, researchers should not treat the consultants' work as their own.